As flash memory has become more and more prevalent in storage, from the consumer to the enterprise, people have been charmed by its performance characteristics but get stuck on its longevity. SSDs based on SLC flash are typically rated at 100,000 to 1,000,000 write/erase cycles, while MLC-based SSDs are rated for significantly fewer. For conventional hard drives, the analogous (if physically distinct) increase in failures over time has long been solved by mirroring (or other redundancy techniques). When applying this same solution to SSDs, a common concern is that two identical SSDs with identical firmware storing identical data would run out of write/erase cycles for a given cell at the same moment, and thus mirroring would not increase data reliability. While the logic might seem reasonable, permit me to dispel that specious argument.

The operating system and filesystem

From the level of most operating systems or filesystems, an SSD appears like a conventional hard drive and is treated more or less identically (Solaris’ ZFS being a notable exception). As with hard drives, SSDs can report predicted failures through SMART. For reasons described below, SSDs already keep track of the wear of cells, but one could imagine even the most trivial SSD firmware keeping track of the rapidly approaching write/erase cycle limit and notifying the OS or FS via SMART, which would in turn notify the user. Well in advance of actual data loss, the user would have an opportunity to replace either or both sides of the mirror as needed.
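
To make the SMART idea concrete, here is a minimal sketch of the kind of bookkeeping even trivial firmware could do. It assumes a hypothetical firmware that counts erases per block against a rated cycle limit and raises a predicted-failure flag once the most-worn block has less than 10% of its life left. The class `WearMonitor`, the 100-cycle rating, and the 10% threshold are illustrative assumptions, not any real SMART attribute or vendor implementation.

```python
class WearMonitor:
    """Hypothetical firmware-side wear tracker (illustrative only).

    Assumes the firmware knows a rated write/erase cycle limit per block
    and raises a predicted-failure flag, in the spirit of a SMART status,
    once the most-worn block drops below a remaining-life threshold.
    """

    def __init__(self, num_blocks, rated_cycles, warn_fraction=0.10):
        self.rated_cycles = rated_cycles    # assumed rated cycles per block
        self.warn_fraction = warn_fraction  # assumed: warn below 10% life
        self.erase_counts = [0] * num_blocks

    def record_erase(self, block):
        self.erase_counts[block] += 1

    def remaining_life(self):
        # Fraction of rated cycles left on the most-worn block.
        worst = max(self.erase_counts)
        return max(0.0, 1.0 - worst / self.rated_cycles)

    def predicted_failure(self):
        # Raised well before the worst block actually exhausts its cycles,
        # giving the OS (and the user) time to replace a mirror side.
        return self.remaining_life() < self.warn_fraction


# Usage: one block nearing its (hypothetical) 100-cycle rating.
monitor = WearMonitor(num_blocks=4, rated_cycles=100)
for _ in range(95):
    monitor.record_erase(0)
print(monitor.predicted_failure())  # -> True
```

The threshold is what buys time: the warning fires while every block can still hold data, so the replacement happens before, not after, the wear-out.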

SSD firmware

Proceeding down the stack to the level of the SSD firmware, there are two relevant features to understand: wear-leveling and excess capacity. There is no static mapping between the virtual offset of an I/O to an SSD and the physical flash cells the firmware chooses to record the data. For a variety of reasons (early mortality of flash cells, write performance, bad cell remapping), the SSD firmware must remap data all over its physical flash cells. In fact, hard drives have a similar mechanism by which they hold sectors in reserve and remap them to fill in for defective sectors. SSDs have the added twist that they want to maximize the longevity of their cells, each of which will ultimately decay over time. To do this, the firmware ensures that no cell is written far more frequently than any other, a process called wear-leveling for obvious reasons.
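
A toy model helps show how spare capacity and wear-leveling combine. The sketch below is a hypothetical flash translation layer, not any vendor's firmware: it assumes one logical block maps to one physical block, a superseded block is erased immediately, and new data always goes to the free block with the lowest erase count. The class name `WearLevelingFTL` and its parameters are illustrative.

```python
import heapq


class WearLevelingFTL:
    """Toy flash translation layer with dynamic wear-leveling.

    Assumptions (illustrative, not any real firmware): one logical block
    maps to one physical block, a physical block is erased as soon as it
    is superseded, and new data always goes to the least-erased free block.
    """

    def __init__(self, logical_blocks, spare_blocks):
        physical = logical_blocks + spare_blocks  # excess capacity
        self.erase_counts = [0] * physical
        self.l2p = {}  # logical block -> physical block
        # Min-heap of (erase_count, physical_block) for free blocks.
        self.free = [(0, p) for p in range(physical)]
        heapq.heapify(self.free)

    def write(self, lba):
        # Choose the free block that has been erased the fewest times.
        _, target = heapq.heappop(self.free)
        old = self.l2p.get(lba)
        self.l2p[lba] = target
        if old is not None:
            # The stale copy is erased and returned to the free pool.
            self.erase_counts[old] += 1
            heapq.heappush(self.free, (self.erase_counts[old], old))
        return target


# Rewriting the same LBA lands on a different physical block each time.
ftl = WearLevelingFTL(logical_blocks=4, spare_blocks=2)
placements = [ftl.write(0) for _ in range(6)]
print(placements)  # -> [0, 1, 2, 3, 4, 5]
```

This only models dynamic wear-leveling; real firmware also relocates cold data, manages pages within erase blocks, and retires bad blocks, all of which add further remapping.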

To summarize, subsequent writes to the same LBA (the same virtual location) on an SSD could land on different physical cells for any of the reasons listed. The firmware is, more often than not, deterministic, so two identical SSDs with exactly the same physical media and I/O stream (as in a mirror) would behave identically. In practice, however, minor timing variations in the commands from the operating software and differences in the media (described below) ensure that identical SSDs will behave differently. As time passes, those differences are magnified such that two SSDs that started with the same mapping between virtual offsets and physical media will quickly and completely diverge.
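
The divergence argument can be illustrated with the same kind of toy FTL. In this sketch, assumed purely for illustration, `run_ftl` replays one I/O stream through a deterministic least-erased-first allocator; `bad_blocks` is a hypothetical stand-in for a per-drive media defect that the firmware never uses. Timing variations are not modeled here, only a media difference.

```python
import heapq


def run_ftl(writes, physical_blocks, bad_blocks=frozenset()):
    """Replay a write stream through a toy least-erased-first FTL.

    Hypothetical model: bad_blocks stands in for per-drive media defects
    that the firmware never uses. Returns the final logical->physical map.
    """
    erase_counts = [0] * physical_blocks
    free = [(0, p) for p in range(physical_blocks) if p not in bad_blocks]
    heapq.heapify(free)
    l2p = {}
    for lba in writes:
        _, target = heapq.heappop(free)
        old = l2p.get(lba)
        l2p[lba] = target
        if old is not None:
            erase_counts[old] += 1
            heapq.heappush(free, (erase_counts[old], old))
    return l2p


# The same I/O stream a mirror would send to both sides.
stream = [i % 8 for i in range(1000)]

a = run_ftl(stream, physical_blocks=12)
b = run_ftl(stream, physical_blocks=12)
c = run_ftl(stream, physical_blocks=12, bad_blocks={3})

print(a == b)  # -> True: identical media and firmware, identical mapping
print(a == c)  # -> False: one defective block and the mappings diverge
```

A single unusable block on one side is enough to shift every subsequent placement, which is the deterministic-firmware point in miniature.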

Flash hardware and physics

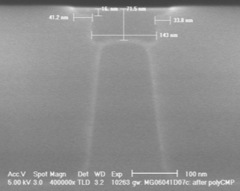

Identical SSDs with identical firmware still have their own physical flash memory, which can vary in quality. To break the problem apart a bit, an SSD is composed of many cells, and each cell’s ability to retain data slowly degrades as it’s exercised. Each cell is in fact a physical component of an integrated circuit. Flash memory differs from many other integrated circuits in that it requires far higher voltages, and it is this high voltage that causes the cell’s oxide layer to gradually degrade over time. Further, not all cells are created equal: microscopic variations in the thickness and consistency of the physical medium can make some cells more resilient and others less so; some cells might be DOA, while others might last significantly longer than the norm. By analogy, if you install new light bulbs in a fixture, they might burn out in the same month, but how often do they fail on the same day? The variability of flash cells affects the firmware’s management of the underlying cells, but, more directly, it means that two SSDs in a mirror would experience data loss in corresponding regions at different rates.
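
The light-bulb intuition can be sketched as a small simulation. Every number here is an assumption for illustration, not measured data: per-block endurance is drawn from a normal distribution (mean 3,000 cycles, 10% relative standard deviation), wear-leveling is treated as ideal so every block sees the same cycle count, and each drive's logical-to-physical mapping is modeled as an independent random permutation. The helper `block_endurance` is hypothetical.

```python
import random


def block_endurance(seed, blocks, mean=3000.0, spread=0.10):
    """Per-block erase-cycle endurance for one hypothetical drive.

    Assumption: endurance is normally distributed around `mean` with a
    relative standard deviation of `spread`. Real distributions differ.
    """
    rng = random.Random(seed)
    return [max(0.0, rng.gauss(mean, mean * spread)) for _ in range(blocks)]


BLOCKS = 1000
end_a = block_endurance(seed=1, blocks=BLOCKS)
end_b = block_endurance(seed=2, blocks=BLOCKS)

# Ideal wear-leveling erases every block equally, so each drive's first
# bad block appears when the cycle count passes its weakest block.
first_a = min(end_a)
first_b = min(end_b)

# Each drive's FTL maps a logical region to its own physical block; model
# that as two independent permutations (hypothetical, for illustration).
map_a = random.Random(3).sample(range(BLOCKS), BLOCKS)
map_b = random.Random(4).sample(range(BLOCKS), BLOCKS)

# A mirrored region is lost only once BOTH of its copies have worn out.
first_mirror_loss = min(
    max(end_a[map_a[r]], end_b[map_b[r]]) for r in range(BLOCKS)
)

print(f"drive A first bad block at ~{first_a:.0f} cycles")
print(f"drive B first bad block at ~{first_b:.0f} cycles")
print(f"first region lost on both sides at ~{first_mirror_loss:.0f} cycles")
```

Under these assumptions the two drives' weakest blocks wear out at different cycle counts, and a mirrored region can only be lost at or after the later of the two, never earlier.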

Wrapping up

As with conventional hard drives, mirroring SSDs is a good idea to preserve data integrity. The operating system, filesystem, SSD firmware, and physical properties of the flash medium make this approach sound both in theory and in practice. Flash is an exciting new technology that changes many of the assumptions derived from decades of experience with hard drives. As always, proceed with care (especially when your data is at stake), but get the facts: in this case, the wisdom of conventional hard drives still applies.

6 Responses

Hey Adam,

Awesome post. Do you happen to know if the FMA disk-transport module covers SSDs in addition to physical disk drives? I would assume so, since SSDs look like drives from the operating system’s (and the fault management daemons’) perspective.

– Ryan

@Ryan That’s right. It’s the same mechanism as for conventional HDDs.

I don’t like this post because it is a lot of talking and not a lot of proof. If the best you can do is explain the basics of ssd construction, you don’t have a strong case.

The core of what you’re trying to do here is address people’s concerns that SSDs off the line are more identical to each other than hard drives. But I don’t think you were successful at all!

"if you install new light bulbs in a fixture, they might burn out in the same month, but how often do they fail on the same day?" Ugh… that sounds like something a random internet person would say, not an expert.

@RC Apologies if I’ve failed to make a strong enough case. When you say that there’s "a lot of talking and not a lot of proof" I assume that you’d like to see a study performed with a number of mirrored SSDs over time that demonstrates that data integrity is preserved. Indeed, that would be a useful datapoint, but insufficient without examining the underlying software and hardware at work. Any one of the three points in the post (the operating system and filesystem, the SSD firmware, and the flash hardware) should on its own be sufficiently convincing as to the safety of mirroring flash SSDs. I welcome the observation of any holes in my arguments.

The tungsten filament in an incandescent light bulb evaporates over time as voltage is applied; microscopic variations in the thickness, uniformity, and quality of the filament determine the overall longevity of the bulb. The same concept applies to the construction of a flash cell where the thickness, uniformity, and quality of the oxide layer (as well as other physical characteristics) determine the longevity. It may be that my light bulb analogy sounds inexpert — indeed analogies are often intended to render concepts more accessible to the lay person — but it’s apt nonetheless.

Good post. I have a few questions. The mapping between logical and physical address spaces seems to distribute the writes evenly, and hence all the hardware inside a single device fails at approximately the same time. If you just mirror the content on another device, it would receive every write processed by the first one. Though the FTL of the second device places blocks at different physical positions, I think the characteristic of perfectly distributing the writes would mean its hardware also suffers approximately similar erase patterns. And the flash cells seem to show more or less similar physical characteristics with some minor deviations (from what I understood). Hence, wouldn’t it be the case that using another flash disk as a mirror helps in recovering from failures caused by reasons other than wear? I think some data showing that the second device (or parts of it) suffered different erase patterns would add a lot of weight to your post. I agree with your point that one part of the physical address space might not fail at the same time on both SSDs. But betting on it would probably be risky. Feel free to point out any glaring mistakes in my arguments; I am new to this field and have yet to understand all the intricacies.

@andor Thanks for the comment. Your point is a good one: the FTLs on both drives are going to attempt to wear-level and, assuming the same average cell reliability, two drives in a mirror will fail around the same time. However, the same is true of conventional hard drives. Both SSDs and HDDs have an expected lifespan, and in each case the drive firmware detects the approaching end of life from physical measurements taken on the drive and reports an expected failure, at which time the drives should be replaced. My first point about SMART was perhaps the most salient: SSDs, like HDDs, can detect and report failures due to age and wear; mirroring is intended to account for bit errors and larger failures unrelated to normal wear.